Simulated brain networks learn faster if their individual cells have the benefit of diversity. This finding comes from a study by scientists at Imperial College London. They constructed virtual brains that wired together identical brain cells, and virtual brains that wired together different kinds of brain cells—that is, brain cells that had different electrical properties.

When the scientists had the different brains complete a learning task, they observed that the more heterogeneous brains performed better than the homogeneous ones. Specifically, “heterogeneous” brains learned faster and were more energy efficient than “homogeneous” brains.

The scientists say that their findings could teach us about why our brains are so good at learning, and might also help us to build better artificially intelligent systems, such as digital assistants that can recognize voices and faces, or self-driving car technology.

“Evolution has given us incredible brain functions—most of which we are only just beginning to understand,” said Dan Goodman, PhD, a senior lecturer at Imperial’s Department of Electrical and Electronic Engineering. “Our research suggests that we can learn vital lessons from our own biology to make AI work better for us.”

Goodman is the senior author of a paper that reported the new findings. The paper, titled “Neural heterogeneity promotes robust learning,” appeared October 4 in Nature Communications.

“Learning with heterogeneity was more stable and robust, particularly for tasks with a rich temporal structure,” the article’s authors wrote. “In addition, the distribution of neuronal parameters in the trained networks is similar to those observed experimentally. We suggest that the heterogeneity observed in the brain may be more than just the byproduct of noisy processes, but rather may serve an active and important role in allowing animals to learn in changing environments.”

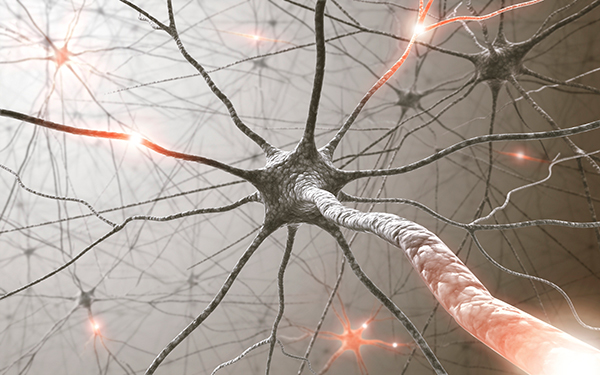

The brain is made up of billions of cells called neurons, which are connected by vast “neural networks” that allow us to learn about the world. Neurons are like snowflakes: they look the same from a distance, but on closer inspection it’s clear that no two are exactly alike.

By contrast, the cells in an artificial neural network, the technology on which AI is based, are all identical; only their connectivity varies. Despite the speed at which AI technology is advancing, artificial neural networks do not learn as accurately or as quickly as the human brain, and the researchers wondered whether this lack of cell variability might be partly to blame.

They set out to study whether emulating the brain by varying the properties of the cells in a neural network could boost learning in AI. They found that this variability improved the networks’ learning and reduced their energy consumption.

To carry out the study, the researchers focused on tweaking each cell’s “time constant,” that is, how quickly the cell decides what to do based on what the cells connected to it are doing. Some cells will decide very quickly, looking only at what the connected cells have just done. Other cells will be slower to react, basing their decision on what other cells have been doing for a while.
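The article does not spell out the cell model, but a leaky integrate-and-fire (LIF) neuron is a standard way to give a simulated cell a time constant. The sketch below assumes that model; the function name `simulate_lif`, the threshold, and the input values are illustrative choices, not the study’s parameters. It feeds the same train of weak, evenly spaced input pulses to a fast cell (small time constant) and a slow cell (large time constant).

```python
import math

def simulate_lif(inputs, tau, dt=1.0, threshold=1.0):
    """Simulate a single leaky integrate-and-fire (LIF) neuron.

    inputs: input drive at each time step (arbitrary units)
    tau: membrane time constant, in the same units as dt
    Returns the list of time steps at which the neuron spiked.
    """
    decay = math.exp(-dt / tau)  # small tau -> potential leaks away quickly
    v = 0.0
    spikes = []
    for t, drive in enumerate(inputs):
        v = decay * v + drive    # leak a little, then add the new input
        if v >= threshold:
            spikes.append(t)
            v = 0.0              # reset the membrane potential after a spike
    return spikes

# Illustrative values only; not the parameters used in the study.
# Weak input pulses arrive every 5 time steps.
pulses = [0.4 if t % 5 == 0 else 0.0 for t in range(100)]

fast = simulate_lif(pulses, tau=2.0)   # reacts mainly to the most recent input
slow = simulate_lif(pulses, tau=50.0)  # accumulates input over a longer window
print("fast cell spikes:", fast)
print("slow cell spikes:", slow)
```

With these numbers, the fast cell’s potential leaks away between pulses and never reaches threshold, while the slow cell adds up three pulses before firing, so it fires at steps 10, 25, 40, 55, 70 and 85.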

After varying the cells’ time constants, they tasked the network with performing some benchmark machine learning tasks: to classify images of clothing and handwritten digits; to recognize human gestures; and to identify spoken digits and commands.

The results show that varying the time constants allowed the network to combine slow and fast information, making it better able to solve tasks in more complicated, real-world settings.
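A rough way to see why mixing speeds can help is to look at how long each cell keeps a trace of a single input. The sketch below treats each cell as a simple leaky integrator (an assumption that ignores spiking) and uses hypothetical time constants: a homogeneous population in which every cell is fast, and a heterogeneous one that mixes fast and slow cells.

```python
import math

def response_after(taus, delay, dt=1.0):
    """Remaining response of each cell `delay` steps after a unit input pulse.

    Each cell is treated as a leaky integrator, so a pulse of size 1
    decays as exp(-delay * dt / tau).
    """
    return [math.exp(-delay * dt / tau) for tau in taus]

# Hypothetical time constants, chosen only for illustration.
homogeneous = [5.0, 5.0, 5.0, 5.0]       # all cells share one fast time constant
heterogeneous = [2.0, 5.0, 20.0, 100.0]  # fast and slow cells mixed together

for delay in (1, 10, 50):
    hom = [round(r, 3) for r in response_after(homogeneous, delay)]
    het = [round(r, 3) for r in response_after(heterogeneous, delay)]
    print(f"delay={delay:>2}  homogeneous={hom}  heterogeneous={het}")
```

Fifty steps after the pulse, the homogeneous population has essentially forgotten it (about 0.00005 remaining), whereas the slowest heterogeneous cell still holds about 0.61. Meanwhile the fastest heterogeneous cell drops from about 0.61 to 0.007 between 1 and 10 steps, so it still signals how recently the input arrived. A downstream readout could, in principle, draw on both kinds of signal.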

When they changed the amount of variability in the simulated networks, they found that the ones that performed best matched the amount of variability seen in the brain, suggesting that the brain may have evolved to have just the right amount of variability for optimal learning.
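One way to change the amount of variability in a simulation is to draw each cell’s time constant from a distribution whose mean is held fixed while its spread is varied. The sketch below assumes a gamma distribution and measures spread with the coefficient of variation (CV, standard deviation divided by mean); the function name `sample_time_constants` and all values are hypothetical, not taken from the study.

```python
import random
import statistics

def sample_time_constants(n, mean_tau, cv, seed=0):
    """Draw n time constants with a given mean and coefficient of variation.

    Uses a gamma distribution: shape k = 1/cv**2 and scale = mean_tau * cv**2
    give mean k * scale = mean_tau and standard deviation mean_tau * cv.
    cv = 0 returns identical time constants (a homogeneous network).
    """
    if cv == 0:
        return [mean_tau] * n
    rng = random.Random(seed)
    shape = 1.0 / cv**2
    scale = mean_tau * cv**2
    return [rng.gammavariate(shape, scale) for _ in range(n)]

# Sweep the amount of heterogeneity while keeping the average time constant fixed.
for cv in (0.0, 0.25, 0.5, 1.0):
    taus = sample_time_constants(10_000, mean_tau=20.0, cv=cv)
    mean = statistics.fmean(taus)
    sample_cv = statistics.pstdev(taus) / mean
    print(f"target CV={cv:.2f}  sample mean={mean:.1f}  sample CV={sample_cv:.2f}")
```

Setting `cv=0` reproduces the homogeneous case. A sweep like this is one plausible way to compare networks that differ only in how varied their cells are; the study’s own procedure may differ.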

The study’s first author, Nicolas Perez, a PhD student at Imperial College London’s Department of Electrical and Electronic Engineering, said: “The brain needs to be energy efficient while still being able to excel at solving complex tasks. Our work suggests that having a diversity of neurons in both brains and AI systems fulfills both these requirements and could boost learning.

“We demonstrated that AI can be brought closer to how our brains work by emulating certain brain properties. However, current AI systems are far from achieving the level of energy efficiency that we find in biological systems. Next, we will look at how to reduce the energy consumption of these networks to get AI networks closer to performing as efficiently as the brain.”