Researchers at the National Institute of Standards and Technology (NIST) say they have found a way to significantly improve the accuracy of key information on how heat affects the stability of folded DNA structures (DNA origami). For a number of years, scientists have been meticulously assembling hundreds of strands of DNA to build a collection of structures that could include drug delivery containers, biosensors, and other biocompatible devices.

The team published its study “Analysis and uncertainty quantification of DNA fluorescence melt data: Applications of affine transformations” in Analytical Biochemistry.

To function reliably, these structures, only a few tens of nanometers in length, must be carefully shaped so that they can, for example, deliver drugs to specific targets. But the hydrogen bonds that bind pieces of DNA together to form the double helix depend on both temperature and their local environment.

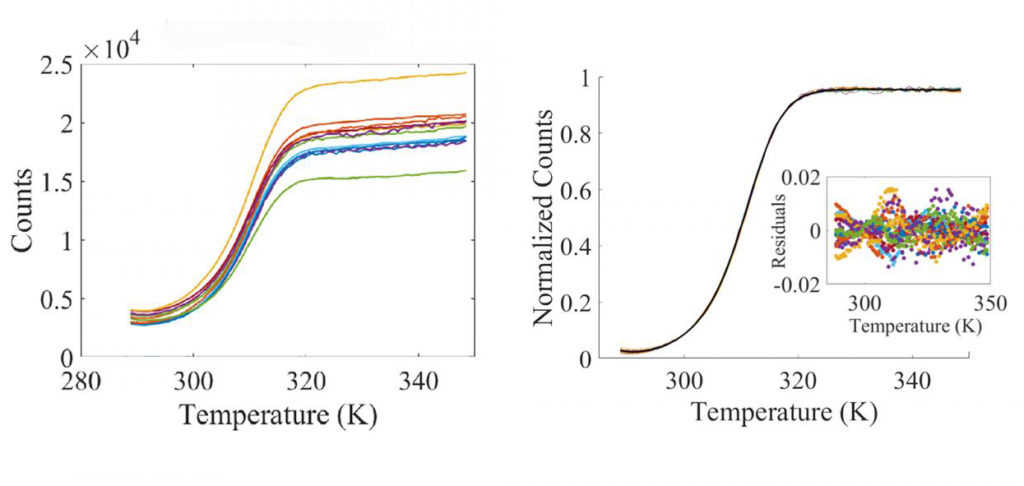

To determine how different strands of DNA react to changes in temperature, researchers rely on a series of measurements that form a DNA melt curve, a graph that reveals, for instance, the temperature at which half the strand has melted, or unraveled. It also shows the amount of heat the strands must absorb to raise their temperature by one degree Celsius. By revealing these and other thermal properties of the strands, the curve gives scientists the knowledge to design durable, more complex structures made from DNA.
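As a rough illustration of how the half-melted temperature can be read off a melt curve, the sketch below interpolates the point where the melted fraction crosses 0.5. The function name `melting_temperature`, the sigmoidal shape, and the synthetic data are assumptions made for this example; they are not taken from the NIST study.

```python
import numpy as np

def melting_temperature(temps, fraction_melted):
    """Interpolate the temperature at which fraction_melted crosses 0.5.

    Assumes fraction_melted rises monotonically from about 0 to about 1
    as temperature increases (idealized, hypothetical data).
    """
    temps = np.asarray(temps, dtype=float)
    frac = np.asarray(fraction_melted, dtype=float)
    # np.interp needs increasing x-values, so treat temperature
    # as a function of the melted fraction.
    return float(np.interp(0.5, frac, temps))

# Synthetic sigmoidal melt curve centered at 60 degrees C (illustrative only).
temps = np.linspace(40.0, 80.0, 81)
fraction = 1.0 / (1.0 + np.exp(-(temps - 60.0) / 2.0))

tm = melting_temperature(temps, fraction)
print(f"Estimated melting temperature: {tm:.2f} C")
```

Real fluorescence data would first have to be converted to a melted fraction, and that conversion is exactly where the distortions discussed in this article come into play.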

The NIST researchers have designed a mathematical algorithm that reportedly accounts automatically for unknown effects that distort the measurements, allowing scientists to reap the full benefits of the melt curve.

But given the minute amounts of DNA in the experiments, that was difficult to do in practice. So Majikes and Liddle reached out to a NIST mathematician, Anthony Kearsley, PhD, and his collaborator, NIST physicist Paul Patrone, PhD, in the hopes of finding a mathematical solution.

The true DNA melt curve is hidden in every set of measurements; the challenge was to find a way to reveal it. No known mathematical theory fully describes the melt curve, so Kearsley and Patrone had to figure out a way to remove the uncertainties in the melt curve using the experimental data alone.

In searching for an algorithm that would solve this problem, the team recognized that the distortions to the true DNA melt curves were behaving in a straightforward manner. That is, the distortions were akin to a special kind of funhouse mirror, one that preserved the relative spacing between data points even as it contracted or expanded the curve, and that allowed parallel lines to remain parallel. To try to correct those effects, the scientists applied a mathematical tool known as an affine transformation.
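In symbols, an affine transformation of a measured signal combines a rescaling with a shift. One simple way to write the idea is shown below. The symbols $s_i$, $a_i$, and $b_i$ are illustrative choices for this article and may differ from the notation and the exact form used in the study:

$$
\tilde{s}_i(T) = a_i \, s_i(T) + b_i, \qquad a_i > 0 .
$$

Here $s_i(T)$ is the raw signal from dataset $i$ at temperature $T$. Under such a map, ratios of differences are unchanged, which is one way to read the "relative spacing" property:

$$
\frac{\tilde{s}_i(T_2) - \tilde{s}_i(T_1)}{\tilde{s}_i(T_3) - \tilde{s}_i(T_1)}
= \frac{s_i(T_2) - s_i(T_1)}{s_i(T_3) - s_i(T_1)} .
$$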

“Fluorescence-based measurements are a standard tool for characterizing the thermodynamic properties of DNA systems. Nonetheless, experimental melt data obtained from polymerase chain-reaction (PCR) machines (for example) often leads to signals that vary significantly between datasets. In many cases, this lack of reproducibility has led to difficulties in analyzing results and computing reasonable uncertainty estimates,” write the investigators.

“To address this problem, we propose a data analysis procedure based on constrained, convex optimization of affine transformations, which can determine when and how melt curves collapse onto one another. A key aspect of this approach is its ability to provide a reproducible and more objective measure of whether a collection of datasets yields a consistent ‘universal’ signal according to an appropriate model of the raw signals.

“Importantly, integrating this validation step into the analysis hardens the measurement protocol by allowing one to identify experimental conditions and/or modeling assumptions that may corrupt a measurement. Moreover, this robustness facilitates extraction of thermodynamic information at no additional cost in experimental time.”

“We illustrate and test our approach on experiments of Förster resonance energy transfer (FRET) pairs used [to] study the thermodynamics of DNA loops.”

Kearsley and Patrone were looking for a particular affine transformation, i.e., one that made each dataset conform to all the others, so that they would essentially look the same. But to find that transformation, using a technique known as constrained optimization, the scientists had to step away from the blackboard and immerse themselves in the mechanics of the DNA laboratory.
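The idea of making each dataset conform to the others can be sketched with a much simpler stand-in: fit a scale and an offset for every curve by ordinary least squares against a reference curve. This is not the constrained, convex optimization the investigators describe. The function `collapse_onto_reference`, the choice of the first curve as the reference, and the noiseless synthetic data are all assumptions made for illustration.

```python
import numpy as np

def collapse_onto_reference(curves, reference):
    """Fit scale a_i and offset b_i so that a_i * curve_i + b_i ~ reference.

    Uses ordinary least squares per curve; the constrained, convex
    optimization described in the study is not reproduced here.
    """
    curves = np.asarray(curves, dtype=float)
    reference = np.asarray(reference, dtype=float)
    collapsed = np.empty_like(curves)
    params = []
    for i, curve in enumerate(curves):
        # Design matrix columns: the raw curve and a constant term.
        design = np.column_stack([curve, np.ones_like(curve)])
        (a, b), *_ = np.linalg.lstsq(design, reference, rcond=None)
        collapsed[i] = a * curve + b
        params.append((a, b))
    return collapsed, params

# Synthetic data: one "true" curve distorted by a random scale and
# offset per well (96 hypothetical wells, no measurement noise).
rng = np.random.default_rng(0)
temps = np.linspace(40.0, 80.0, 81)
true_curve = 1.0 / (1.0 + np.exp(-(temps - 60.0) / 2.0))
scales = rng.uniform(0.5, 2.0, size=96)
offsets = rng.uniform(-1.0, 1.0, size=96)
raw = scales[:, None] * true_curve[None, :] + offsets[:, None]

collapsed, params = collapse_onto_reference(raw, raw[0])
spread_before = raw.std(axis=0).max()
spread_after = collapsed.std(axis=0).max()
print(f"max spread before: {spread_before:.3f}, after: {spread_after:.2e}")
```

Because the synthetic distortions are exactly affine and noise-free, the curves line up almost perfectly. With real data, the residual spread after fitting would indicate whether the datasets share a consistent underlying signal.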

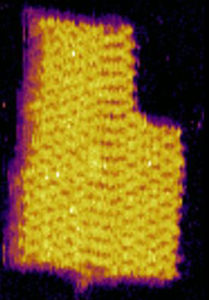

Neither Kearsley nor Patrone had even heard of DNA origami, let alone the measurements required to assemble the melt curve. They asked dozens of questions about each component of the nanoscale experiment, determining which parts were important to model and which were irrelevant. After weeks of theoretical calculations, Patrone got his first chance to view the actual experiment. He was amazed by the laboratory setup, with its 8×12 array of 96 tiny wells, each containing exactly the same sequence of DNA, from which Majikes and Liddle had recorded 96 different DNA melt curves.

Kearsley and Patrone fleshed out the optimization problem they thought would work best to remove the errors. Then they applied the algorithm to each of the 96 curves and watched what happened.

On a computer screen, the multitude of curves, distorted in different ways, became indistinguishable, each tracing out the same shape, height, and endpoints. The 96 curves had collapsed to become a single DNA melt curve.

“We were convinced we had solved the problem,” said Kearsley.

Scientists have used DNA origami to fabricate nanorobots that perform computing operations and pre-programmed tasks inside living organisms. They have also relied on DNA origami to create miniature drug delivery containers that open only when they identify and attach to infected cells.

The team is now alerting researchers who work with DNA origami that it is possible to accurately measure the melt curve and guide the growth of DNA origami structures. Just as importantly, said Patrone, the same technique could be applied to other biophysical problems in which the true data is obscured by similar types of errors. The researchers are studying how to improve the accuracy of experiments in which human cells flow through tiny cancer-hunting detectors.