Proteins, we hear you loud and clear. A group of researchers from the Massachusetts Institute of Technology (MIT) devised an artificial-intelligence (AI)-based approach to translate each of the 20 amino acids into audible sound, and even built a downloadable phone app to play each one, all with the idea that music could be a medium for better understanding protein structure and could possibly lead to new protein designs.1

“We’re interested in building models of materials based on proteins,” says senior author Markus Buehler, PhD, the McAfee Professor of Engineering and head of the department of civil and environmental engineering at MIT, during an interview with GEN. “And we’re always trying to find new ways.”

Typically, he explains, the models are based on molecules and atoms and chemistry—that is, more conventional methods—but then he and his colleagues got to thinking about different ways of representing matter. Given that at the nanoscale, things always move and vibrate, they thought, “Maybe there’s a way of describing matter, uniquely, by looking at their vibrational spectra,” he says. So they combined that concept with the general understanding that proteins, like music, have a hierarchical structure. “We thought there might be some synergies of putting these things together,” he says.

With a plan formed, the MIT researchers took the natural vibrational frequencies of the amino acids, which are inaudible to the human ear. Adhering to the musical concept of transpositional equivalence, they used a computer algorithm to transpose each one into a unique audible sound within the range of 20 to 20,000 Hz, that is, the frequencies that the human ear can detect.
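The transposition idea can be illustrated with a minimal Python sketch. It is not the researchers' algorithm: it simply assumes that every frequency is multiplied by one common factor, which keeps all frequency ratios (the musical intervals) intact while moving the spectrum into the audible band. The function name and the terahertz input values are hypothetical and chosen only for illustration.

```python
import math

AUDIBLE_MIN_HZ = 20.0
AUDIBLE_MAX_HZ = 20_000.0


def transpose_to_audible(freqs_hz):
    """Scale all frequencies by one common factor so they land in the audible band.

    Multiplying every frequency by the same factor preserves the ratios
    between them, which is what transposition means in music.
    """
    lo, hi = min(freqs_hz), max(freqs_hz)
    if hi / lo > AUDIBLE_MAX_HZ / AUDIBLE_MIN_HZ:
        raise ValueError("spectrum is wider than the audible range")
    # Put the geometric centre of the spectrum at the geometric centre
    # of the audible band (an arbitrary choice made for this sketch).
    factor = math.sqrt(AUDIBLE_MIN_HZ * AUDIBLE_MAX_HZ) / math.sqrt(lo * hi)
    return [f * factor for f in freqs_hz]


# Hypothetical vibrational frequencies in Hz (made-up values, not measured data).
example = [1.0e12, 2.5e12, 4.0e12]
audible = transpose_to_audible(example)
```

Because only a single scale factor is applied, the relationships among the original frequencies carry over unchanged to the audible tones.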

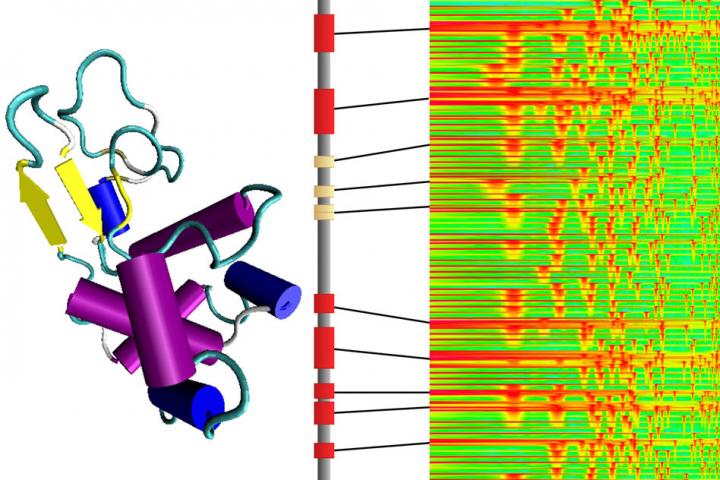

The result was a 20-tone amino acid scale. The sound created for each amino acid was not a single frequency but a cluster of frequencies, much like a musical chord, in which several notes are played simultaneously. The frequency spectra of the amino acids were then mapped onto a piano keyboard, creating a musical score that could be played. And because proteins are not one-dimensional, the researchers also varied the volume and length of each tone to reflect secondary structure and give the musical composition some rhythm.
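To make the keyboard mapping concrete, here is a small Python sketch that snaps a cluster of audible frequencies to the nearest keys of a standard 88-key piano. It uses the usual equal-temperament relation with A4 (key 49) tuned to 440 Hz; whether the study used this exact tuning or rounding rule is an assumption, and the function names are hypothetical.

```python
import math


def nearest_piano_key(freq_hz):
    """Return the piano key number (1 to 88) closest in pitch to freq_hz.

    Standard equal temperament: key 49 is A4 at 440 Hz, and each key is
    one semitone (a factor of 2 ** (1 / 12)) from its neighbour.
    """
    key = round(12 * math.log2(freq_hz / 440.0) + 49)
    if not 1 <= key <= 88:
        raise ValueError(f"{freq_hz} Hz is outside the 88-key piano range")
    return key


def chord_to_keys(freqs_hz):
    """Map a cluster of frequencies to a sorted list of distinct piano keys."""
    return sorted({nearest_piano_key(f) for f in freqs_hz})


# A C-E-A cluster given as approximate frequencies in Hz.
keys = chord_to_keys([261.63, 329.63, 440.0])  # [40, 44, 49]
```

Rounding to the nearest key discards small pitch differences, so two nearby frequencies can collapse onto the same key; the set in `chord_to_keys` removes such duplicates.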

To engage the public, the MIT researchers even released a phone app, dubbed Amino Acid Synthesizer, in which users can listen to each of the 20 amino acids and string them together to make playable sequences. The app has already been downloaded more than a thousand times.

Of course, this is not the first time that scientists have turned to music to solve a problem. In fact, science has a history of translating visually represented scientific data into sound, often with the goal of creating an effective teaching tool or helping the visually impaired. For example, Victor Wong, a blind graduate student studying meteorology at Cornell University, decided to create a computer program that translated the gradient of red and blue colors on a weather map into more than 80 piano notes, allowing him to hear the colors.2

The translation of amino acids into music specifically is also a concept scientists have been toying with for years, and the approach often involves mapping amino acids onto classical Western musical scales. For example, in a 2007 paper, UCLA research assistant Rie Takahashi and molecular genetics professor Jeffrey H. Miller devised a reduced scale with a range of 13 base notes, in which each amino acid was assigned a three-note chord, and used a computer algorithm to convert protein sequences into musical notes. Thymidylate synthase A served as their pilot protein, and when they applied the approach, it sang.3

The fundamental difference, Buehler explains, between prior approaches and his team’s is that theirs uses the actual vibrations and structure of molecules to generate sound. Not to mention there’s also the novel use of AI.

Putting the art in artificial intelligence

Because the human brain has limited ability to decipher the subtle sound differences among the sonified amino acids, the MIT researchers turned to AI as a way of learning how the different structures and functions of proteins are actually encoded in the sound. Put another way, they let AI be their translator.

“The AI allowed us—and this was the major enabler of this work—to do this translation fully automatically using a computational model,” says Buehler. He explains that when they tried to study relationships between music and proteins in past work, they had used mathematical tools, but the process was largely done with pen and paper and was “very tedious” and “very difficult.”

“The authors were fairly sober in their recognition that protein-sequence-derived music might not be very meaningful for humans to listen to,” Shawn Douglas, PhD, assistant professor in the UCSF department of cellular & molecular pharmacology, tells GEN. “They cleverly reframed the problem by writing music for computers to listen to instead of human listeners.”

The MIT researchers trained a neural network by feeding it sound representations of many different proteins from a large database, and the neural network learned the various properties of each protein: secondary structure, function, whether it’s an enzyme, and so on. “What the AI learns is basically the language of how this protein is constructed both genetically but also functionally,” says Buehler.

Once the model was trained, the researchers asked it to translate the musical sounds back into a protein sequence and even generated new proteins with certain characteristics, such as being alpha-helix rich or beta-sheet rich. “We can translate these [sounds] back into an amino acid sequence and then thereby design a new protein,” says Buehler. He notes that this method does not actually produce a new protein, only a new protein design. “One of the interesting things about this method is basically that you can design a material not by actually designing the position of the atoms and molecules, but actually designing the sound,” says Buehler.
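The reason sounds can be turned back into a sequence is that each of the 20 amino acids has its own unique tone. The Python sketch below shows only that round trip, not the neural network. The assignment of tone indices in alphabetical order of the one-letter codes is a hypothetical choice made for illustration, not the mapping used in the study.

```python
# One-letter codes of the 20 standard amino acids.
AMINO_ACIDS = "ACDEFGHIKLMNPQRSTVWY"

# Hypothetical one-to-one assignment: amino acid -> tone index 0..19.
TONE_OF = {aa: i for i, aa in enumerate(AMINO_ACIDS)}
AA_OF = {i: aa for aa, i in TONE_OF.items()}


def sequence_to_tones(seq):
    """Encode an amino acid sequence as a list of tone indices."""
    return [TONE_OF[aa] for aa in seq.upper()]


def tones_to_sequence(tones):
    """Decode a list of tone indices back into an amino acid sequence."""
    return "".join(AA_OF[t] for t in tones)


tones = sequence_to_tones("KVFGR")       # [8, 17, 4, 5, 14]
recovered = tones_to_sequence(tones)     # "KVFGR"
```

Because the mapping is one-to-one, any series of tones a model produces, as long as it uses only these 20 tones, decodes to exactly one candidate sequence.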

Douglas, however, expressed some skepticism about the utility of the approach. “It’s still unclear if this approach will be useful in understanding or engineering protein structures,” he says. “One question is whether music is the best intermediate representation if computer algorithms are doing the listening and interpretation.”

“It will be interesting to see if the authors will be able to demonstrate utility in a follow-up work,” Douglas says. “They’ve used natural protein sequence compositions to train a neural network, which in principle can generate novel compositions which could be translated back into protein sequences and tested experimentally.”

Not bad, for a protein

The MIT researchers also took the AI model for a test run with a handful of proteins (lysozyme, myoglobin, a β-barrel, a silk protein, and an amyloid protein) and translated their structures into musical tunes, including a 21-bar musical composition for lysozyme. “It’s an extremely complex piece,” says Wolynes after looking at the musical score. The study researchers explain that the purpose of showing the musical score was to illustrate the timing, rhythm, and progression of notes, and that the notes do not reflect a conventional musical scale.

Douglas listened to the musical products, commenting, “The audio files they generated from protein sequences were surprisingly not that bad to listen to, though I’m not sure I’d play their ‘sonified’ proteins at a party.”

References

1. Yu C-H, Qin Z, Martin-Martinez FJ, and Buehler MJ. A self-consistent sonification method to translate amino acid sequences into musical compositions and application in protein design using artificial intelligence [published online June 26, 2019]. ACS Nano. doi: 10.1021/acsnano.9b02180

2. Oberst T. Blind graduate student ‘reads’ maps using CU software that converts color into sound. Cornell Chronicle. 2005;36:5.

3. Takahashi R and Miller JH. Conversion of amino-acid sequence in proteins to classical music: Search for auditory patterns. Genome Biol. 2007;8:405.