On April 14, 2003, the National Institutes of Health issued a press release announcing that the Human Genome Project had been completed ahead of schedule and under budget. At the time, the human genome was considered, to all intents and purposes, complete. Almost 16 years later, as of the writing of this article, www.genome.gov, a website run by the National Human Genome Research Institute, responded in the affirmative to the question, “Is the human genome completely sequenced?” The “Yes,” it must be said, was followed by a hedged statement: “Within the limits of today’s technology, the human genome is as complete as it can be.”

Over the years, it has become increasingly evident that the reference genome is not the pristine, essentially complete human genome sequence one might believe. Researchers such as George Church, PhD, professor of genetics at Harvard Medical School, have pointed out that stubborn hard-to-sequence portions of the human genome almost certainly contain medically relevant genes. Also, a report published in early 2018 used nanopore sequencing to define the previously uncharted DNA from the centromere of the human Y chromosome. But the biggest surprise was published late last year.

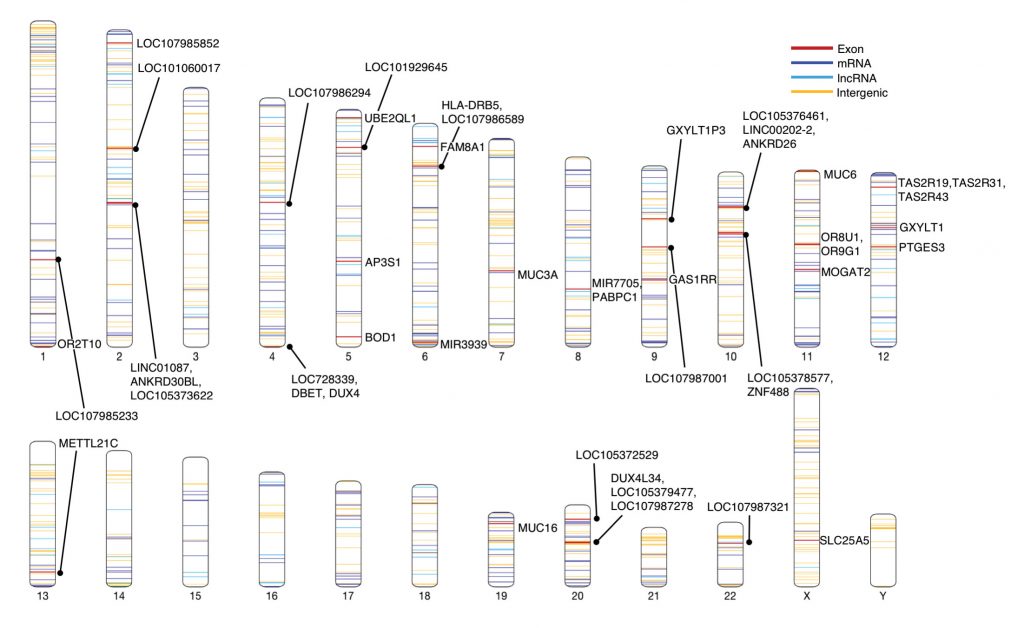

A group led by Steven L. Salzberg, PhD, professor of biomedical engineering, computer science, and biostatistics at Johns Hopkins University select JHU) Medical School, reported in Nature Genetics that a trove of DNA sequence information is missing from the reference genome. The group’s analysis of a dataset of 910 individuals of African descent revealed that the reference genome omits roughly 300 million base pairs select or megabases, Mb)—almost 10% of the entire reference genome.

The utility of the reference genome in advancing genomics over the past 15 years is not in question. To think that its purpose is simply for reanalyzing other genomes is myopic. It is the coordinate system that is used for annotation. It has enabled rare disease research and furthered genome sequencing and assembly work. In addition, large-scale genomic inventories, such as ENCODE select the Encyclopedia of DNA Elements, a public research effort that has identified a wealth of functional elements in the human and mouse genomes) and the 1000 Genomes Project select an international research effort to establish the most detailed catalog of human genetic variation union would have been impossible without the human reference genome.

The missing DNA

Salzberg and colleagues, led by graduate student Rachel Sherman, began their project by examining DNA that had been collected from 910 individuals by the Consortium on Asthma among African-Ancestry Populations in the Americas select CAAPA). Salzberg tells GEN that he had been looking for a unique resource like CAAPA and was lucky that his colleague Kathleen Barnes, PhD, who moved to the University of Colorado Anschutz Medical Campus after 23 years at JHU, was studying asthma and allergy in the African population where those conditions appear at a higher background rate.

The CAAPA dataset contains 1000 genomes, each with more than 1 billion reads, collected from people who live around the world, including the United States, Central Africa, and the Caribbean. It is well known that African populations—comprising more than 2,000 distinct ethno-linguistic groups—exhibit a greater degree of genetic diversity than non-African populations.

herman aligned a total of 1.19 trillion reads from the CAAPA individuals to the reference genome select GRCh38) to construct what she calls a pan-African genome. The results were published in the November 2018 Nature Genetics paper, “Assembly of a pan-genome from deep sequencing of 910 humans of African descent.” Most of the novel DNA fragments were 1000–5000 base pairs long, with the largest chunk being 152,000-base-pairs long.

However, both the functional significance of the sequences and their locations remain mostly unknown.

Some sequences had their locations determined. These sequences, Sherman tells GEN, appeared to be fairly randomly distributed, and inserted sequences were found in 315 genes. Sherman adds that a good portion of the sequences are likely to be in the centromeres and telomeres because those regions are less well represented in the reference genome than other sequence.

Salzberg is no stranger when it comes to human genome analysis. From 1997 to 2005, he was on the faculty of The Institute for Genomics Research select TIGR union the nonprofit set up by J. Craig Venter, PhD, and was a co-author on the Celera Genomics draft human genome report in 2001. But Salzberg was astounded by the main result of his latest study. He had predicted his team would uncover perhaps 8–40 Mb of novel DNA, but never expected to find 300 Mb. Of course, Salzberg’s team considered the presence of contamination and has reanalyzed the data in multiple ways to ensure that this is a bona fide result.

According to Deanna M. Church, PhD, currently senior director of mammalian applications at Inscripta, who worked for more than 10 years at the National Center for Biotechnology Information and was closely involved in building the human reference genome, the identification of novel human genome sequences not seen in the reference is not so surprising. Although Salzberg used short-read sequencing—specifically, Illumina sequencing—other researchers have used other approaches, such as long-read technology, to describe novel sequences.

The “300 Mb” figure needs to be taken with caution, Church says, because the technical issues that accompany assembly methods in short-read technology cannot distinguish between haplotypes in the donor samples. As a result, sequences might be created that don’t exist in the population because the haplotypes get mixed in ways that don’t make sense. When that happens, technical artifacts are created that don’t align with the reference. That is, some of the sequences that are classified as novel might instead be located in areas where there is a lot of haplotypic diversity.

Church recalls an experiment she was involved in when updating the previous GRCh37 assembly to the current GRCh38 assembly. Because Church and colleagues were adding sequences into the GRCh38 reference, they knew that the sequences they were working with were not already present. So, these sequences could be thought of as equivalent to Salzberg’s missing sequences.

When Church and colleagues cut them down and aligned them to the reference, roughly 70% of these reads aligned to the GRCh37 reference using multiple alignment methods.” Church says this shows that, “just because a sequence isn’t represented in the reference doesn’t mean that it won’t align to the reference.” She adds that follow-up experiments with technology that allows the separation of sequences into haplotypes will yield more robust data.

When the reference genome was made, two haplotypes were mixed together to make a mosaic consensus. Today, however, new technology is available that makes haplotype separation possible, and some groups, such as the 1000 Genomes Project’s structural variation select SV) working group, are starting to focus on this aspect of genomic analysis.

One method that can separate haplotypes is long-read sequencing, like the sequencing technology developed by Pacific Biosciences select which is currently undergoing an acquisition by Illumina) and Oxford Nanopore Technologies. A single read comes from a single molecule of DNA, which will be a single haplotype. With multiple long reads, haplotypes can likely be separated because there are enough haplotype-specific single nucleotide polymorphisms select SNPs) that overlap between reads.

Another method is “linked reads,” a new sequencing technology developed by 10X Genomics in which multiple short reads from the same molecule select same haplotype) are tagged with the same barcode. Therefore, it is known that they came from the same haplotype.

Yet another method is Hi-C based scaffolding. It is being developed by companies such as Dovetail Genomics and Phase Genomics to determine 3D chromosome structure. These relatively new techniques can be used together as well.

Genomic diversity

Salzberg opines that the lack of diversity in the reference genome is a problem, the size of which “depends on what you’re using the reference genome for.” Much of what we use it for is to determine genetic causes of disease and/or cancer. And so long as researchers are studying a person with a similar genetic background to the reference, using the reference genome this way is fine. But “at least when looking at the African pan-genome, there is quite a lot of variation that’s missing.”

Geneticist Nathaniel Pearson, PhD, the founder of Root, a company that rewards blood and marrow donor volunteers with insight from their own HLA genes, agrees that the reference genome has limitations. “Since we use the reference genome for several different things, and we’ve long been using a single reference genome to try to do all of them, [it] ends up doing none of them ideally,” Pearson says.

Documenting the extent of human genome variation is an ongoing challenge. For years, the genomics community relied on SNP analysis because that is what the technology allowed. But over the past decade, researchers have cataloged large chunks of DNA that are present or absent in different people—so-called copy number variations. A great deal of the genomic variation in the human population may reside in these larger structural variations—insertions and deletions select “indels”) of thousands or tens of thousands of bases. As Salzberg says, to fully understand those differences, you need to sequence more genomes.

For future reference

What, then, is the fate of the reference genome? Salzberg argues in the Nature Genetics study that “a single reference genome is not adequate for population- based studies of human genetics. Instead, a better approach may be to create reference genomes for all distinct human populations, which will eventually yield a comprehensive pan-genome capturing all of the DNA present in humans.” He tells GEN that making reference genomes for distinct human populations’ genomes is not only the best solution, but that it will likely be the solution. It is, says Salzberg, “just a matter of time.”

Salzberg believes that for a study of the genetic propensity of any disorder, researchers should have a reference genome “from a normal, healthy individual from that population.” But what is a normal, healthy person? Pearson thinks it is a fool’s errand to try to make a “healthy only” reference genome for many reasons. For example, many genetic variants have varying penetrance, and a DNA donor could be healthy at the time of donation, only later to be diagnosed with cancer.

Deanna Church says that a solution is more complicated than just making more reference genomes, but fully supports collecting more sequences from diverse populations. However, admixture select the introduction of new genetic lineages into a population) is another complication to consider. If an African American is 50% admix European and West African, which reference genome would be most appropriate to use?

Another hurdle is determining how to efficiently compare a sequence query to a large set of diverse reference genomes. Salzberg and his former student and current JHU colleague Ben Langmead, PhD, an assistant professor of computer science, developed Bowtie in 2009—an algorithm used to align large quantities of shotgun sequencing data to the reference genome.

Rather than think in terms of a simple linear reference genome, Church says that many genomics researchers favor something called a variation graph representation. Variation graphs are DNA sequence graphs that represent genetic variation, including large-scale structural variation.

Last summer, Erik Garrison, a PhD student in the lab of Richard M. Durbin, PhD, at the Wellcome Trust Sanger Institute in Cambridge, UK, published a multipurpose toolkit in Nature Biotechnology of computational methods to perform genomic analysis using a variation graph as a reference named “vg.” This approach is the beginning of people having the tools necessary to do these analyses on their own. But not everyone thinks that the graph structure will succeed, and Church admits that it is still too early to see if the graph approach will catch on.

To solve the limitations of the reference genome, Pearson’s preference would be to create an ancestral reference genome. “At every spot on every chromosome, the letter that we [would] write at that spot is the letter shared by the last common ancestor of all the human copies today,” Pearson explains.

In principle, this would create a universal coordinate system for every person’s genome. An ancestral reference genome, Pearson continues, has several advantages: it is ethnically equitable, allows the inference of whether a piece of DNA is an insertion or a deletion, and removes the issue of the ideal health status of reference genome donors. Salzberg says the approach is interesting from an evolutionary point of view, but of limited value, he argues, for understanding the genetic causes of disease.

With new sequencing technology and analysis software, filling in the missing gaps in the reference genome will be easier. For example, in October 2018, Pacific Biosciences unveiled the most contiguous diploid human genome assembly of a single individual to date. Pearson notes that the best reference genome for any given person is their own. With the falling cost of genomic sequencing, perhaps the day is coming where our reliance on a reference genome will no longer exist.

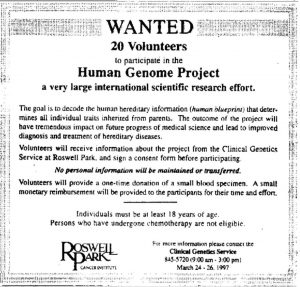

Whose DNA Makes Up the Reference Genome?

In early 1997, master DNA library builder Pieter J. De Jong, PhD, placed an ad in a Buffalo, NY, newspaper seeking volunteers for the Human Genome Project. These anonymous genome pioneers provided the DNA from which the reference genome was assembled a few years later. At about the same time, J. Craig Venter, PhD, and colleagues at Celera Genomics assembled their own genome from five major donors of different ethnic backgrounds, including select it was later revealed on national television) Venter himself.

In retrospect, the Human Genome Project’s recruitment strategy seems insufficient. “Before the human genome project started, our understanding of diversity in the population was very limited and simple,” Deanna M. Church, PhD, tells GEN.

The makeup of the reference genome was not published at the time it was released, so the origin of the DNA was anyone’s guess. In subsequent years, the guesses ventured likely reflected the awareness that genome-wide association studies select GWAS) are primarily conducted on European populations. Recent analysis indicates that although the reference genome is composed of DNA from several different contributors, the largest single donor was a single African-American individual, known as RP-11.

The first formal acknowledgement of this result did not appear in the literature until the release of the Neandertal genome. Appearing May 2010 in an article published in Science, this genome resulted from work led by Svante Pääbo, PhD, an evolutionary geneticist at the Max Planck Institute for Evolutionary Anthropology, and David Reich, PhD, a geneticist at Harvard Medical School. Buried in the article’s 150-page-long supplement was an analysis indicating that about 70% of the reference genome was contributed by RP-11, an individual who appeared to be of half African, half European descent. select That same report also noted that a small percentage of the reference came from a person of 100% Chinese descent.)

The strategy used to compile the reference genome wouldn’t suffice today. A new strategy would likely reflect what has been learned since the completion of the Human Genome Project, including, Church suggests, a different approach to consent. Of course, now that direct-to-consumer genetic testing kits are available, the notion of genetic privacy has changed completely.Ideally, Church adds that future reference genome projects would take extensive health information from diverse donors.