March 1, 2011 (Vol. 31, No. 5)

More Sophisticated Instruments Streamline Practices but Introduce New Challenges in the Process

This isn’t your advisor’s LC-MS. It’s faster, more specific, more sensitive, more reliable, and more automatable, and it has become the go-to instrumentation for bioanalysis and drug discovery. Yet more sophisticated instrumentation brings more challenges and leaves you to struggle with more difficult tasks: how to use it for (top-down) proteomics; how to mitigate, or at least measure, the effect of the matrix; how to do both quantitative and qualitative analysis on the same instrument; how to make your runs even faster or automate the process; and how to figure out what that contaminant in your drug actually is.

The advent of mass spectrometry has allowed scientists to find minuscule quantities of a biological substance, even as liquid chromatography runs have become shorter. “That’s important if you’re trying to look at compounds that are dosed at very low levels,” such as inhalants that are designed not to have any systemic exposure, says Robert Plumb, senior manager in the pharmaceutical business operations division at Waters.

Yet compounds in urine, plasma, and other biological matrices once removed by long, elegant chromatography runs now compete with the analyte for the mass spectrometer’s ionizing source—a phenomenon termed ion suppression. That can lead to irreproducible signal from the sample, and hence, a less robust assay, unless the matrix effect can be tamed and quantified.

To determine the matrix effect, which is required for assays in support of regulatory submissions for preclinical and clinical drug applications, scientists would typically have to reconfigure the plumbing of their mass spectrometer. Measurements would have to be taken of combinations of different samples (analyte with or without an internal standard, a solvent blank, and the matrix itself) injected into the mass spectrometer both through the LC column and postcolumn, with retention times determined for each. These data are then combined by an algorithm in internal or external software to determine to what extent the matrix is suppressing or enhancing the signal of the analyte.

Waters has introduced new instrumentation—Xevo TQ and Xevo TQ-S—with IntelliStart technology, “which enables us to automatically mix different samples within the chromatography system without having to disconnect the system, make changes to the plumbing of the system, or reconfigure the system,” Plumb explains. Software built into the system crunches the data, determines the matrix factor, and incorporates it into the instrument operations.
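The article does not say how the instrument software calculates the matrix factor. As a minimal sketch, assuming the commonly used definition (peak response of the analyte with matrix present divided by its response in neat solvent, optionally normalized to the same ratio for the internal standard), the arithmetic might look like the following. The function names and all peak areas are hypothetical and are not taken from any vendor's software.

```python
def matrix_factor(area_with_matrix: float, area_neat: float) -> float:
    """Peak area measured with matrix present divided by the area in neat solvent."""
    if area_neat <= 0:
        raise ValueError("neat-solution peak area must be positive")
    return area_with_matrix / area_neat


def is_normalized_matrix_factor(mf_analyte: float, mf_internal_standard: float) -> float:
    """Analyte matrix factor divided by the internal-standard matrix factor."""
    if mf_internal_standard <= 0:
        raise ValueError("internal-standard matrix factor must be positive")
    return mf_analyte / mf_internal_standard


def percent_matrix_effect(mf: float) -> float:
    """Negative values indicate ion suppression, positive values enhancement."""
    return (mf - 1.0) * 100.0


# Hypothetical peak areas (arbitrary units), for illustration only.
analyte_mf = matrix_factor(area_with_matrix=8200.0, area_neat=10000.0)
internal_standard_mf = matrix_factor(area_with_matrix=9000.0, area_neat=10000.0)
normalized_mf = is_normalized_matrix_factor(analyte_mf, internal_standard_mf)

print(f"analyte MF: {analyte_mf:.2f}")
print(f"matrix effect: {percent_matrix_effect(analyte_mf):.0f}%")
print(f"IS-normalized MF: {normalized_mf:.3f}")
```

A matrix factor below 1 corresponds to suppression and a value above 1 to enhancement. An internal standard that is suppressed to a similar degree pulls the normalized value back toward 1.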

Of course, it’s always best to minimize matrix effects in the first place. At the end of last year, Waters introduced the Ostro 96-well plate with a special membrane that extracts phospholipids, one of the principal causes of ion suppression, from plasma and serum. The company also offers the Acquity UPLC system, which can generate high-resolution chromatography and work at different pHs, says Plumb, “enabling you to modify the chromatographic separation to minimize the interference of any of the endogenous materials with your analyte of interest.”

Oh, The Pain of LC-MS

Joan Stevens avoids some of the difficulties seen with sub-2 µm columns in the ultra-high pressures of UPLC—in particular the increased tendency to plug with “dirty” samples—by running reversed-phase LC through Agilent Technologies’ superficially porous Poroshell columns.

“It has mass transfer such that it acts very much like a sub-2 µm particle LC column, but it doesn’t have the back pressure associated with it,” she says. The efficient mass transfer equates with faster analysis time, allowing for more samples in a shorter amount of time—yet with “optimum resolution so you have selectivity between your peaks of interest.”

Stevens, a sample-preparation applications scientist at Agilent, works on detection of multisuite medications by LC-MS/MS, a relatively new field. With nearly 100 million people in the United States’ steadily aging population reliant on a broad range of therapeutics, the demand on the clinics that manage those patients is huge and steadily increasing, she points out.

Urine is routinely tested to make sure patients are not abusing the substances they’ve been prescribed, as well as to make sure they’re complying with their regimens. To meet that burden, the accredited laboratories that analyze these samples day to day require “less expensive, faster, more accurate and reproducible methods for monitoring the presence and concentration of pain-management medications.”

The urine sample is cleaned up off-line by solid-phase extraction using Agilent’s Bond Elut Plexa™ PCX prior to injection into the LC. The amide-free cationic-exchange resin removes neutral and acidic compounds, yet has minimal binding sites for endogenous interferences, reducing the matrix effect by allowing concentration of basic analytes such as most pharmaceuticals and their metabolites.

Plexa beads are polymeric and can therefore be dried, and they use a very small bed mass, which “minimizes the amount of solvent that you need to use and also quickens the sample-prep portion of the cleanup from the urine sample,” Stevens notes. “And the lovely part about this is that it can be automatable…when those samples come in they can actually be placed on an instrument and then be transferred right over to the LC-MS.”

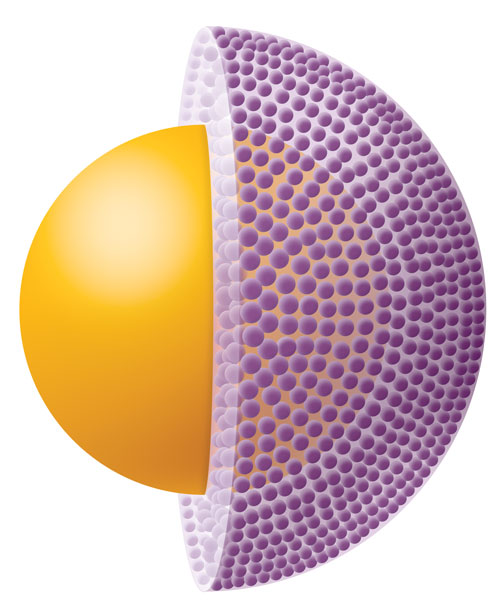

Agilent’s Poroshell 120, 2.7 µm particles have a 1.7 µm solid silica core with a 0.5 µm porous outer layer, which offers the benefits of sub-two-micron performance in terms of high resolution and high-speed separations.

Put the Top Down

When analytes are large molecules like proteins, different techniques are called for.

Proteins are more difficult than peptides to separate by standard LC methods, and more difficult to detect by MS. Thus, proteomics traditionally starts with the digestion of proteins into 10 or more peptides, which are then separated by LC and detected by MS, explains Evert-Jan Sneekes, nano LC specialist at Dionex. A typical sample yields several thousand peaks, of which only a portion can be detected, and researchers rely on smart search algorithms to identify the proteins from those peaks.

Yet assume that two proteins differ only by a post-translational modification (PTM). After digestion there would be no way to tell that there were two different starting species, unless the modified peptide and its unmodified analog are detected within the mixture of several thousand peptides.

Sneekes has developed a top-down approach to doing proteomics, using a two-dimensional, capillary-scale LC method, combining size exclusion and monolithic reversed-phase LC capable of handling a wide range of sizes, to separate intact proteins. “This keeps all protein information within one molecule and does not multiply sample complexity.”

Of course, there are various technical challenges (or else everyone would be doing it) to doing large-scale top-down proteomics, Sneekes explains. There are several steps, and all of them need to be optimized both individually and to work together.

It’s necessary to have a 2-D LC workflow that is easy to use, gives good performance, and is of the dimensions to interface directly with the MS. The MS needs to be able to deal with molecules on a scale of up to 100 kD or even higher. Databases need to be developed and searches optimized for proteins. “And all of these bits and pieces have to be designed to work for the samples—for the proteins in this case. That’s the major development going on now.”

A simplified representation of the difference between top-down and bottom-up proteomics: In the top-down approach only two peaks appear and have to be identified to differentiate between the species. In the bottom-up approach there will be seven peaks, of which five cannot differentiate between the species. Only detecting the modified peptide and its unmodified analog will allow differentiation. [Dionex]
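The peak counting in the caption above can be reproduced with a toy model. This is only a sketch under stated assumptions: one protein that digests into six peptides, a single phosphorylation as the PTM, and invented peptide masses. Real digests are far more complex, and nothing here reflects the Dionex workflow itself.

```python
PHOSPHO_SHIFT = 79.96633  # monoisotopic mass added by one phosphorylation (HPO3), in Da
WATER = 18.01056          # monoisotopic mass of water, in Da

# Hypothetical peptide masses (Da) for a protein that digests into six peptides.
PEPTIDE_MASSES = [800.4, 950.5, 1100.6, 1250.7, 1400.8, 1550.9]


def digest(peptide_masses, modified_at=None):
    """Return peptide masses, adding the PTM shift to one peptide if requested."""
    return [
        mass + PHOSPHO_SHIFT if index == modified_at else mass
        for index, mass in enumerate(peptide_masses)
    ]


def intact_mass(peptide_masses):
    """Intact protein mass: hydrolysis adds one water per cleaved bond, so subtract them."""
    return sum(peptide_masses) - (len(peptide_masses) - 1) * WATER


unmodified = digest(PEPTIDE_MASSES)
modified = digest(PEPTIDE_MASSES, modified_at=2)

# Bottom-up: both species are digested together, so peaks are the distinct peptide masses.
bottom_up_peaks = set(unmodified) | set(modified)
shared_peaks = set(unmodified) & set(modified)
diagnostic_peaks = bottom_up_peaks - shared_peaks

# Top-down: each intact species gives one peak.
top_down_peaks = {intact_mass(unmodified), intact_mass(modified)}

print(len(bottom_up_peaks), "bottom-up peaks,", len(shared_peaks), "shared")
print(len(diagnostic_peaks), "diagnostic peptide peaks")
print(len(top_down_peaks), "top-down peaks")
```

In this model the two species can be told apart bottom-up only if both diagnostic peptides are detected, whereas the two intact masses differ directly by the PTM shift.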

The Great Unknown

Identifying small molecules can be a challenging task as well. In the manufacture of pharmaceuticals, any impurities or degradants found above a certain threshold level—as low as 0.05%, depending on the daily dose and genotoxicity—must be identified.

Arindam Roy, a senior analytical scientist at Boehringer Ingelheim Ben Venue Laboratories, specializes in structural elucidation of impurities in pharmaceuticals. “The first thing we do is chromatography on the front end, to make sure they’re separated from any other components that we are not interested in,” he says. “Then we get this into MS.”

When dealing with potentially genotoxic compounds, the FDA wants manufacturers to be able to detect and identify down to 1–20 parts per million. The low detection limits required for such contaminants mean utilizing the power of accurate-mass MS, sometimes combined with other even more specialized techniques.

“When you are trying to elucidate structure you want to go beyond one MS, fragment your molecule and get accurate mass, again, from the fragmented parts as well.” This can be done repeatedly up to LC-MS¹⁰, but after MS⁴ or MS⁵ you lose signal, he adds.

LC-MS spectral databases are of little use in identifying compounds, because different instruments yield different qualitative spectra, “so the statistical matching is a very difficult process in the LC-MS world.” Vendors may try to see what compounds have the same accurate mass and narrow down the search that way. But when you have no knowledge of what that structure would be, “organic chemistry is your best friend,” Roy says.
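Narrowing a search by accurate mass comes down to comparing a measured mass against the theoretical monoisotopic mass of each candidate formula, usually within a parts-per-million tolerance. The sketch below is illustrative only: the candidate formulas, the measured value, and the 5 ppm default tolerance are assumptions chosen for the example. It works with neutral masses and ignores adducts and charge.

```python
# Monoisotopic masses of the most abundant isotopes, in Da.
MONOISOTOPIC = {"C": 12.0, "H": 1.00782503, "N": 14.00307401, "O": 15.99491462}


def monoisotopic_mass(composition: dict) -> float:
    """Sum element masses for a composition such as {"C": 8, "H": 10}."""
    return sum(MONOISOTOPIC[element] * count for element, count in composition.items())


def ppm_error(measured: float, theoretical: float) -> float:
    """Signed mass error in parts per million."""
    return (measured - theoretical) / theoretical * 1e6


def match_candidates(measured: float, candidates: dict, tolerance_ppm: float = 5.0) -> list:
    """Return names of candidates whose theoretical mass lies within the tolerance."""
    return [
        name
        for name, composition in candidates.items()
        if abs(ppm_error(measured, monoisotopic_mass(composition))) <= tolerance_ppm
    ]


# Hypothetical candidates that all share a nominal mass of 194 Da.
candidates = {
    "C8H10N4O2": {"C": 8, "H": 10, "N": 4, "O": 2},
    "C7H14O6": {"C": 7, "H": 14, "O": 6},
    "C10H14N2O2": {"C": 10, "H": 14, "N": 2, "O": 2},
}

measured_neutral_mass = 194.0804  # hypothetical accurate-mass measurement, in Da

for name, composition in candidates.items():
    error = ppm_error(measured_neutral_mass, monoisotopic_mass(composition))
    print(f"{name}: {error:+.1f} ppm")

print(match_candidates(measured_neutral_mass, candidates))
```

A nominal-mass instrument could not separate these three formulas. Tightening the tolerance shortens the candidate list, but as Roy notes, choosing among the remaining structures still takes chemical knowledge of the process.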

“You look at the process and see what structures are possible in this process, and then you get the mass specs and you see, out of all the structures, what this is matching.” The elucidation protocol may not end there. To confirm the identity of the contaminant, Roy sometimes resorts to deuterium-exchange experiments, LC-NMR, and other exotic techniques.

Qual-Quant

Traditionally, scientists have had to choose between the high resolution and selectivity of an accurate mass instrument used for qualitative mass spectrometry, and the sensitivity, dynamic range, and speed of a nominal mass instrument preferred for quantitative work.

The AB Sciex TripleTOF™ 5600 offers the best of both worlds with no need to compromise, according to Gary Impey, the company’s director of pharma and CRO business. “Because of the speed and sensitivity, along with high-resolution accurate mass capabilities, the instrument can do the qualitative workflows quite easily, but additionally does triple quadrupole-like quantitation as well.”

“Within a single analysis you could be doing high-throughput relative quant on the parent drug, while at the same time getting identification of the major metabolites.”

To accomplish this, AB Sciex started with “the front end of our 5500 platform, the QTrap 5500, which provided enhanced sensitivity.” The ToF Analyzer™ was added to the mix, “to deliver the high resolution at the speed and sensitivity required for high-end triple quad-like quantitation,” he explains. To top it off, a 40 GHz multichannel TDC (time-to-digital converter) detector provides the highest sampling speeds and maintains high resolution, even at low mass. “This is about ten times faster than any other detector on the market today,” says Impey.

The AB Sciex TripleTOF™ 5600 System integrates qualitative exploration, rapid profiling, and high-resolution quantitation workflows on a single platform, the company reports.

Equipment Grant Program for LC-MS Research

PerkinElmer says it will award up to $1,000,000 worth of equipment grants during 2011 to scientists pursuing improvements to environmental and human health. The company expects to work closely with the researchers over the life of the program, beyond the initial awarding of funds. This grant opportunity will be focused on researchers who require the use of liquid chromatography-mass spectrometry.

To learn more about the program and to submit an application and abstract online, please go to www.perkinelmer.com/grant.