Bioprocessing 4.0 is all about digitalization and how it can streamline the analysis and multidirectional communication of data—be it between equipment, among unit operations, or across groups and stages of development. Many of the concepts behind Bioprocessing 4.0 may already be familiar. They echo the concepts behind Industry 4.0, the “Fourth Industrial Revolution.” These concepts—connectivity, intelligence, and flexible automation—have appeared frequently, if disjointedly, in general discussions about business and how it might transform industrial manufacturing.

Despite the challenges they pose, Industry 4.0 concepts are beginning to penetrate various industries. However, pulling the concepts together and making them work for the complex world of biomanufacturing may be particularly difficult.

“Difficult,” we should hasten to add, doesn’t mean “impossible” or “not worth the trouble.” In fact, Industry 4.0 concepts are winning over many experts in biomanufacturing. Several of these experts were consulted for this article. For the benefit of our readers, they are sharing their insights here rather than holding onto them until upcoming meetings.

Digitalization makes change sustainable

Digitization—the conversion of analog to digital representation—and digitalization—the utilization of digital data and technologies—are hot topics throughout the industry. This is true in R&D, supply chain management, and even patient engagement programs. When digitization and digitalization come to manufacturing, they become “more complex, less understood—especially in biopharma,” says Bonnie Shum, senior engineer, Global Biologics Manufacturing Science and Technology, Genentech.

There are four main stages of data analytics that can be used in biomanufacturing—descriptive, diagnostic, predictive, and prescriptive. The industry is for the most part in the predictive analytic phase, forecasting and understanding the future, and identifying potential failures in processes and equipment before they happen. How is this done?

Shum cited as an example a 2003 case study about chromatography transition analysis. Genentech revisited the study to explore how its data could be used to monitor column health and integrity. Genentech compared and evaluated algorithms and calculation methods head to head, integrating a variety of quantitative criteria. In each comparison, the company had a clear goal in mind, whether the focus was on robustness or on sensitivity.

Genentech expects digitalization to sustain progress in data analytics. “Integrating it into our automation, our IT system, that will give it a place, a home, so then it’s not just lost in some Excel file somewhere,” Shum explains. “You need to assure that there is infrastructure in place that is flexible enough but also fit for purpose.”

The other part of the equation (so to speak) is “the host part,” Shum points out. That means making sure you clearly define roles and responsibilities. Reviewing matters such as ownership and accountability helps reveal gaps in a workforce’s skill set. “That’s something,” she maintains, “most companies have learned with change management.”

Propagating information from development to manufacturing

“Whenever you have digitalization efforts, it’s usually less about technology and more about people,” says Oliver Hesse, director, Lab Automation and Data Management, Biological Development, Bayer Healthcare. “[It’s about] culture, change management, and building something that the end user can use.”

Hesse says that he wants to be able to “get from process development to commercial manufacturing in a more digital and smarter way.” It used to be, he points out, that people in one silo would develop a process, write a report, and “throw it over the fence to the people that would do the manufacturing.” Then the people responsible for manufacturing would have to interpret the report and program their own machines. It was a highly manual, highly analog process, requiring a lot of questions back and forth, and it was not very amenable to automation.

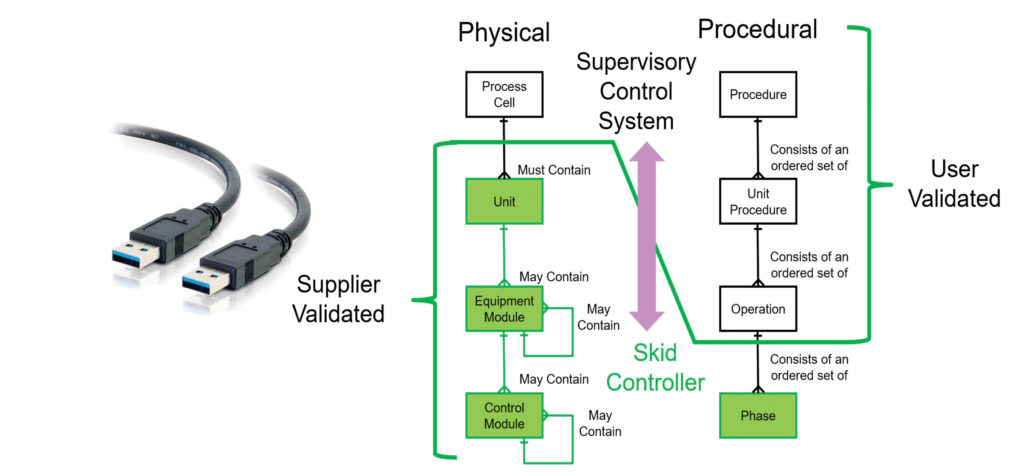

A process in development can be represented in software as a machine-readable recipe. And reusable manufacturing actions can incorporate standardized descriptions of what actually constitutes a certain unit operation or a certain piece of equipment. “We can use that information and propagate it throughout process development and up to manufacturing,” says Hesse.

To do so, it’s necessary to have standardization, including an alignment of terms, across the industry. “If we propagate information [such as the description of a unit operation or a piece of equipment] through multiple systems, we need to have a concept of what this actually means,” Hesse explains. There should not be any ambiguity about what constitutes a process.

A chromatography process, for example, should be defined to indicate whether it encompasses individual actions such as load, wash, and elute, or whether it can accommodate specified inputs and outputs, as well as the degree to which the inputs and outputs may vary. Hesse offers another (and possibly simpler) practical example: the designation of Day 0 versus Day 1 in cell culture. “You have to have some conventions around those,” he advises.
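To make the idea of a machine-readable recipe more concrete, here is a minimal sketch in Python. It is an illustration only, not Bayer's system or any industry standard: the class names, the `ALLOWED_ACTIONS` vocabulary, the parameter names, and the `culture_day` helper are all hypothetical. It shows a unit operation described by named inputs, outputs, and steps, a check of step names against a shared vocabulary, and an explicit Day 0 versus Day 1 convention.

```python
from dataclasses import dataclass, field, asdict
import json

# Hypothetical shared vocabulary of chromatography actions (an assumption
# made for this sketch, not an industry standard).
ALLOWED_ACTIONS = {"equilibrate", "load", "wash", "elute"}


@dataclass
class Step:
    action: str
    parameters: dict = field(default_factory=dict)


@dataclass
class UnitOperation:
    name: str
    inputs: list
    outputs: list
    steps: list = field(default_factory=list)

    def unknown_actions(self):
        """Return step actions that are not in the shared vocabulary."""
        return [s.action for s in self.steps if s.action not in ALLOWED_ACTIONS]


def culture_day(elapsed_hours, first_day=0):
    """Map elapsed culture time to a day label under an explicit convention.

    first_day=0 labels the inoculation day "Day 0"; first_day=1 labels it "Day 1".
    """
    return int(elapsed_hours // 24) + first_day


# Illustrative recipe; all names and values are made up.
capture = UnitOperation(
    name="capture_chromatography",
    inputs=["clarified_harvest"],
    outputs=["eluate_pool"],
    steps=[
        Step("load", {"flow_cv_per_h": 10}),
        Step("wash", {"volume_cv": 5}),
        Step("elute", {"volume_cv": 3}),
    ],
)

# Serialize so that other systems can read the same description.
recipe_json = json.dumps(asdict(capture), indent=2)
```

Because the vocabulary and the day convention are written down in one place, a downstream system can reject a step name it does not recognize instead of guessing, and two groups that number culture days differently can convert between conventions explicitly.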

Sample, analyze, record, iterate, adjust

The transformation to a well-understood and optimally controlled process begins with the generation of process data in an automated fashion, says Paul Kroll, PhD, business development manager, Lucullus PIMS, Securecell. The result should be a digitalization in which the data—not just data from a single unit operation, but also data from previous unit operations—are used to provide feedback and control of the bioprocess.

To accomplish this, system integrator Securecell leverages two products. The first is a liquid handling system that transfers sample from reactor to analyzer in a fully automated fashion. The second is a data management software package. The company also executes an analytical plan, Kroll notes, to “handle the samples … trigger the analyzers … record the data … and use the data to control the bioprocess.”

In combination, the two products take advantage of a massive driver list. The drivers, which are compatible with 80% of devices on the worldwide market, allow disparate systems to function together. For example, data from one vendor’s off-gas analyzer and data from another vendor’s permittivity probe were collected and used to control a third vendor’s pump. “The final result,” Kroll remarks, “was an improved process.”
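The multi-vendor example above can be pictured with a short Python sketch. It is not Securecell's software or driver API: the `Sensor` and `Pump` interfaces, the stand-in device classes, the control rule in `feed_rate`, and every numeric value are assumptions chosen for illustration. The point is only that a common interface lets readings from two different devices drive a third.

```python
from typing import Protocol


class Sensor(Protocol):
    """Hypothetical common interface that a device driver would expose."""
    def read(self) -> float: ...


class Pump(Protocol):
    def set_rate(self, ml_per_h: float) -> None: ...


class FakeSensor:
    """Stand-in for a vendor driver; replays a fixed list of readings."""
    def __init__(self, values):
        self._values = iter(values)

    def read(self) -> float:
        return next(self._values)


class FakePump:
    """Stand-in for a pump driver; records the commanded rates."""
    def __init__(self):
        self.rates = []

    def set_rate(self, ml_per_h: float) -> None:
        self.rates.append(ml_per_h)


def feed_rate(biomass_signal, oxygen_uptake, *, gain=2.0,
              uptake_limit=50.0, max_rate=100.0):
    """Illustrative rule: feed in proportion to the biomass signal, scaled
    back when oxygen uptake exceeds a limit, then clamped to the pump range."""
    rate = gain * biomass_signal
    if oxygen_uptake > uptake_limit:
        rate *= uptake_limit / oxygen_uptake
    return max(0.0, min(rate, max_rate))


def control_step(off_gas: Sensor, permittivity: Sensor, pump: Pump) -> float:
    """Read both sensors once and command the pump."""
    rate = feed_rate(permittivity.read(), off_gas.read())
    pump.set_rate(rate)
    return rate


# Usage with stand-in devices: two control cycles.
off_gas = FakeSensor([25.0, 100.0])
probe = FakeSensor([10.0, 10.0])
pump = FakePump()
for _ in range(2):
    control_step(off_gas, probe, pump)
```

In a real installation the control law would come from process knowledge and the device classes from the vendors' drivers; the sketch keeps both deliberately simple.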

Kroll affirms that labs often combine instruments from different vendors. These instruments may have been purchased at different times, for example, or for different initial projects. Also, some instruments may be shared. Sharing an expensive device such as a Raman spectrometer might require users to fit it into different setups from week to week.

The use of digital data and process analytical technology (PAT) to allow for the control of bioprocessing is, for Kroll, almost the definition of Bioprocessing 4.0.

Whisper in my ear while we dance

The biopharmaceutical industry is moving to single-use technologies, and with this move comes a desire for greater flexibility, modularity, and agility in process design and systems integration. The realization of this desire can be facilitated by a “ballroom operations” concept, in which equipment in a large, open space can be moved and reconfigured as needed. The ballroom, or dance floor, concept “works very well with mechanical connections,” notes Pietro Perrone, automation process engineer, Cytiva.

Generally, when new pieces of equipment go into operation, or go into operation in a different configuration, the software allowing them to communicate is customized. If the software hasn’t been prequalified, it needs to be qualified and validated with the equipment online.

“[An integration project] becomes very difficult to do with automation,” Perrone cautions. “It can go into multiple months to get just the automation done, even though the mechanical components are ready to go.”

Cytiva is a participant in a BioPhorum Operations Group (BPOG) collaboration between end users, equipment vendors, and system integrators to develop software to facilitate data sharing and systems integration. “[This software] would allow an end user to select pieces of equipment that they can essentially plug in to their process and do the minimal amount of qualification and validation,” explains Perrone. For example, the software could allow a user to more easily switch to a larger bioreactor or even a bioreactor from a different supplier.

A proof-of-concept simulation, during which the BPOG collaborators met their milestones, “allowed us to realize that we needed to develop some standardization,” Perrone states. The collaborators, he continues, also realized that it would help “to have a standardization body to assist us … to publicize and incorporate this into the standards.”

Paradoxically, standardization of the small components can help with flexibility in the overall scheme. “Including standardization in your process will allow you to improve data capture and analysis and, eventually, process optimization,” Perrone maintains. “We are going to develop flexibility in automation that currently exists in the single-use technical equipment from a mechanical perspective. We want the two to be aligned.”

Holistic modeling

Taking a holistic view, seeing disparate pieces of equipment and sequential operations form a unified model of production, will highlight all the necessary interconnections and enable optimization of the entire production line, reasons Marianthi Ierapetritou, PhD, Gore Centennial Professor, Department of Chemical and Biomolecular Engineering, the University of Delaware. It can lead to many benefits including better synchronization of operations.

What is missing when units are modeled individually is a flowsheet model that can capture realistic manufacturing conditions and allow a variety of scenarios to be investigated. Ierapetritou argues for the use of a “digital twin”: a virtual construct of a physical system that completely mirrors the physical system. It’s not just a simulation, though, because it has to be interconnected with the physical system, with back-and-forth communications between the two.

“Digital twin frameworks are being implemented across various industries for simulation, real-time monitoring, control, and optimization to handle ‘what if’ or risk-prone scenarios for improving process efficiency, safety analysis, maintenance, and decision-making,” Ierapetritou notes.

Such frameworks may be especially helpful in biomanufacturing, which is based on generating products from living organisms. But the frameworks will need to accommodate mathematical complexities that reflect the uncertainties of cell culture. “Being able to extract the necessary information and integrate the critical parameters of the process, while maintaining the necessary details, will be game changing,” she predicts.