Science is a collaborative, data-sharing enterprise. If it is to thrive, it must allow far-flung researchers to take advantage of web-based data infrastructures. Data must be shared over the internet, and large data sets must be stored on web servers. But sharing and storing data online, especially clinical or proprietary information, raises the risk of cyberhacking. Data can be stolen or ransomed for millions of dollars.

The pharmaceutical industry, which amasses both intellectual property and patient data, is a prime target, especially as companies continue to move more sensitive data online where cyberhackers can gain access. Not only are research and development organizations at high risk, but so are medical facilities, which host sensitive patient information.

The healthcare industry confronted this reality during the COVID-19 pandemic. As resources became strained and telehealth appointments became common, security problems at hospitals and medical centers grew worse. In 2020, cyberattacks on healthcare facilities more than doubled, leaving millions of patient records exposed. One group of cyberhackers collected more than $123 million in ransom payments that year alone.

Despite these risks, data sharing and data storage remain essential to advancing science and medicine, and both must be done in a way that keeps data secure.

Assessing the risks

“The risks are significant,” said Brian Bissett, an IT specialist at the U.S. Department of the Treasury’s Bureau of the Fiscal Service. (He is not an official spokesperson for the Department of the Treasury, and the opinions he offers here are his own.) “One of the problems is that it doesn’t take a lot of expensive equipment or a huge R&D cost to do a significant amount of damage. All it really takes is an intelligent person who’s very persistent. And with a minimal amount of equipment, a lot of times with a home PC and internet access, they can do a great deal of damage.”

In the 2020 attacks on healthcare facilities, many of the cyberhackers gained access by exploiting vulnerable network endpoints: devices such as laptops or tablets that can serve as entry points when they are not properly secured.

Data can be stolen both while it is being moved from one point to another and while it is at rest. The more times it is transferred, especially to an external network, the more opportunities cyberhackers have to steal or modify it. And if data at rest is not properly secured, cyberhackers can easily find and take it.

But there are many ways to protect data, whether it is in transit or in storage. When different security methods are layered, stealing data becomes extremely difficult and time-consuming, so the risk of a successful cyberattack becomes very small. For instance, Bissett estimates that data encryption alone is over 90% effective, and adding other protection methods, such as tokenization or limiting who has access, can increase protection even further.
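Tokenization, one of the extra layers Bissett mentions, swaps a sensitive value for a random stand-in and keeps the mapping in a separate, tightly controlled store. The Python sketch below is illustrative only: the `TokenVault` class, its in-memory dictionaries, and the sample record are hypothetical, and a real deployment would keep the vault in hardened, access-controlled storage.

```python
import secrets


class TokenVault:
    """Hypothetical in-memory tokenization vault, for illustration only."""

    def __init__(self) -> None:
        self._token_to_value: dict[str, str] = {}
        self._value_to_token: dict[str, str] = {}

    def tokenize(self, value: str) -> str:
        # Reuse an existing token so a value always maps to the same stand-in
        if value in self._value_to_token:
            return self._value_to_token[value]
        # Random token: nothing about the original value can be derived from it
        token = "tok_" + secrets.token_hex(8)
        self._token_to_value[token] = value
        self._value_to_token[value] = token
        return token

    def detokenize(self, token: str) -> str:
        # Only code with access to the vault can recover the original value
        return self._token_to_value[token]


vault = TokenVault()
record = {"patient_id": "MRN-004217", "age": 54}
# The copy sent to collaborators carries the token, not the real identifier
shared = {**record, "patient_id": vault.tokenize(record["patient_id"])}
```

If the shared copy is stolen, the thief gets only the token; turning it back into a patient identifier would also require breaching the vault, which is the point of layering.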

The goal is to eventually advance cybersecurity methods to the point where cyberhacking becomes impossible. “We’re not there yet,” Bissett admits. “And part of the reason we’re not there yet is it’s so complex. There are so many combinations and permutations of the elements that go into security: elements involving the system, the software, and the people. Every single one of those is a potential path and a vector that can be compromised.”

Protecting shared data

Although stealing data is not yet impossible, various security methods can make it extremely difficult. Today, researchers can store and share data in many online repositories. Most offer basic security measures such as link expiration, encryption, two-factor authentication, and granular permissions, which grant access only to specific information or data sets within a system.
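To make two of those measures concrete, the sketch below combines granular permissions with link expiration: a shared link opens one data set, for named users only, until a deadline. The `ShareLink` type, the `can_access` check, and the sample names are hypothetical and do not reflect any particular repository's API; two-factor authentication and encryption are left out for brevity.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass(frozen=True)
class ShareLink:
    dataset: str               # the single data set this link exposes
    allowed_users: frozenset   # granular permission: who may open it
    expires_at: datetime       # link expiration time (UTC)


def can_access(link: ShareLink, user: str, dataset: str, now: datetime) -> bool:
    # Every check must pass: right data set, authorized user, unexpired link
    return (
        dataset == link.dataset
        and user in link.allowed_users
        and now < link.expires_at
    )


created = datetime(2021, 6, 1, tzinfo=timezone.utc)
link = ShareLink(
    dataset="trial-42/lab-results",
    allowed_users=frozenset({"alice@uni.example"}),
    expires_at=created + timedelta(days=7),  # link stops working after a week
)
```

A week after `created`, even Alice is refused, and Bob or a request for a different data set is refused from the start.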

These methods are effective, says Manu Vohra, managing director of global life sciences at Box, a cloud-based content management company. He adds, however, that these methods are the bare minimum, especially when highly regulated intellectual property and research data are involved.

“Cross-institutional research is critical to leveraging the right building blocks without recreating the wheel,” Vohra points out. “The security controls in IT platforms are largely a factor of what collaboration tools they have in place today, and that may or may not be suited for high-value intellectual property, data, or content.”

For such high-value assets, additional security features are needed to protect sensitive data while it is being stored or shared with collaborators. Vohra recommends artificial intelligence–based anomaly detection, which can identify abnormal behavior within a data set, and automated regression testing, which can keep working code from losing its functionality when it is modified.
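Anomaly detection comes down to learning what normal activity looks like and flagging departures from it. The sketch below swaps in a simple statistical rule (a z-score on daily download counts) for the AI-based models Vohra describes; the function name, the threshold of 3, and the sample history are assumptions chosen for illustration.

```python
from statistics import mean, pstdev


def is_anomalous(history: list[int], today: int, threshold: float = 3.0) -> bool:
    """Flag today's activity if it sits more than `threshold` standard
    deviations from the account's historical mean (a z-score rule)."""
    mu = mean(history)
    sigma = pstdev(history)
    if sigma == 0:
        # Perfectly uniform history: any change at all stands out
        return today != mu
    return abs(today - mu) / sigma > threshold


# Files a (hypothetical) account downloaded on each of the last ten days
history = [12, 9, 11, 10, 13, 8, 11, 10, 12, 9]
```

Here the average is 10.5 downloads with a standard deviation of 1.5, so a day with 12 downloads passes quietly while a sudden burst of 250 is flagged for review. Machine-learning detectors generalize the same idea to many signals at once.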

Box also provides deep learning–based malware detection (which can find and contain sophisticated malware such as ransomware) as well as dynamic multilayered watermarking (which can prevent data leaks and deter unauthorized resharing of information). “We provide,” Vohra asserts, “a built-in security architecture by design, with all the security controls needed today by heavily regulated industries such as life sciences.”
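The idea behind dynamic watermarking is that every copy carries the identity of the person who received it, so a leaked copy points back to its source. The sketch below shows only a visible, single-layer version of that idea; the key, the function names, and the stamp format are hypothetical and are not how Box's multilayered watermarking works.

```python
import hashlib
import hmac
from typing import Optional

SERVER_KEY = b"demo-key-not-for-production"  # hypothetical secret held by the platform


def recipient_tag(recipient: str) -> str:
    # Keyed hash: without the key, nobody can forge a tag for another person
    return hmac.new(SERVER_KEY, recipient.encode("utf-8"), hashlib.sha256).hexdigest()[:12]


def watermark(document: str, recipient: str, when: str) -> str:
    # "Dynamic": each download gets its own recipient- and time-specific stamp
    return f"{document}\n-- Confidential: issued to {recipient} on {when} [ref {recipient_tag(recipient)}]"


def trace_leak(leaked: str, recipients: list[str]) -> Optional[str]:
    # Match the reference tag in a leaked copy back to whoever received it
    for person in recipients:
        if f"[ref {recipient_tag(person)}]" in leaked:
            return person
    return None


copy_for_bob = watermark("Phase II interim results", "bob@partner.example", "2021-06-01")
```

A visible stamp like this can simply be cropped out, which is why commercial tools layer several harder-to-remove marks.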

Tailoring security to researchers’ needs

The healthcare industry, which includes medical research organizations and pharmaceutical companies, has adopted regulations for data storage and sharing. For members of this industry, a data repository that takes these regulations into account can be an incredibly useful tool.

The University of Chicago created a research data management platform called Globus to do just that. The platform, which operates as a nonprofit service, is designed to balance security and usability. It can manage data at scale and support collaborations both within and across institutions. In addition to traditional security features, the platform provides features tailored specifically to the needs of biomedical researchers.

For instance, Globus enforces encrypted data transfers and provides detailed audit logs for sensitive research data, but it also allows users to log in with their institutions’ credentials. This makes it easier to set up secure collaborations and data sharing by leveraging the identity management and credential lifecycle at institutions.

Globus also provides different levels of security depending on the sensitivity of the data. If an institution indicates that its research is subject to strict regulatory requirements, such as those of the Health Insurance Portability and Accountability Act (HIPAA), the platform automatically adds more security elements, which the institution can then fine-tune to its needs.

“For highly protected data, we essentially apply additional authorization and access controls along with audits,” said Rachana Ananthakrishnan, executive director for Globus. “You can use the same tools and have a similar user experience, but if you’re operating on protected data, then we increase those protections. You’re logging in with stronger credentials. You’re not allowed to share anonymously.”
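The tiered approach Ananthakrishnan describes can be pictured as a set of default controls that tighten when data is declared protected and that an institution can then adjust. The sketch below is a hypothetical model of that pattern, not Globus's configuration schema; every field name and default is an assumption.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class SecurityPolicy:
    encrypted_transfer: bool = True     # on for every tier in this sketch
    audit_logging: bool = False
    strong_credentials: bool = False    # e.g. multi-factor login
    anonymous_sharing: bool = True
    extra_authorization: bool = False


def policy_for(protected_data: bool, overrides: Optional[dict] = None) -> SecurityPolicy:
    policy = SecurityPolicy()
    if protected_data:
        # Stricter defaults once an institution declares regulated data
        policy.audit_logging = True
        policy.strong_credentials = True
        policy.anonymous_sharing = False
        policy.extra_authorization = True
    # Institution-specific fine-tuning layered on top of the defaults
    for name, value in (overrides or {}).items():
        if not hasattr(policy, name):
            raise KeyError(f"unknown setting: {name}")
        setattr(policy, name, value)
    return policy


hipaa_policy = policy_for(protected_data=True)
```

The design choice mirrors the quote: users work with the same tools either way, and only the policy object behind them changes.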

Globus is also committed to findability, accessibility, interoperability, and reusability, known collectively as the FAIR data principles. Adhering to these principles helps researchers accelerate research, boost cooperation, and facilitate data reuse. But when large amounts of sensitive data are involved, as in large medical studies or drug development, making data findable and accessible while also keeping it secure can be a challenge.

One way Globus addresses this is Globus Search, which enables secure data discovery with fine-grained access control on metadata, that is, information that describes other data. Consider this example: A cancer research project publishes its metadata. By examining this metadata, other researchers can evaluate whether the project contains data that might be applicable to their own studies.

“Researchers don’t see any privileged information, but they know enough that it’s worth their while to get the data,” Ananthakrishnan notes. “Although often overlooked, data discovery is a critical enabler for sharing and access. And when you have large-scale data without a discovery mechanism, it’s going to get lost.”
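The cancer-study example can be sketched as a search that applies access control at two levels: who may see a metadata entry at all, and who may download the data it describes. The `Record` type, the `search` function, and the sample entries are hypothetical and do not represent the Globus Search API.

```python
from dataclasses import dataclass, field


@dataclass
class Record:
    title: str
    keywords: set[str]
    visible_to: set[str]  # who may see this metadata entry; "public" means anyone
    data_readers: set[str] = field(default_factory=set)  # who may fetch the data itself


def search(records: list[Record], keyword: str, user: str) -> list[dict]:
    results = []
    for r in records:
        if keyword not in r.keywords:
            continue
        if "public" not in r.visible_to and user not in r.visible_to:
            continue  # metadata-level access control: entry stays hidden
        results.append({
            "title": r.title,
            # Only descriptive metadata is returned, never the underlying data
            "can_download": user in r.data_readers,
        })
    return results


records = [
    Record("Tumor RNA-seq cohort", {"cancer", "rna-seq"}, {"public"}, {"pi@hospital.example"}),
    Record("Unpublished pilot study", {"cancer"}, {"pi@hospital.example"}),
]
```

An outside postdoc searching for "cancer" learns that the RNA-seq cohort exists but cannot download it and never sees the unpublished pilot; the study's own investigator sees both.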

Encouraging open science

Some companies want to go further and make data available not only for researchers within a certain content management platform, but for the whole world. “Sharing data helps science go faster,” insists Leslie McIntosh, PhD, CEO of Ripeta, a software company promoting open science and integrity in research. “We’ve seen that with COVID-19. More information was shared more quickly, and science progressed at a speed that I’ve never seen before.”

“Communications on the importance of sharing data and open science were already happening,” she continues. “The pandemic came at a time when we had science that had not quite matured but was ready to be put out publicly where scientists could act on it.”

Ripeta recommends using trusted data repositories to share sensitive data and making data available to the public whenever possible. In addition to accelerating discovery, sharing data provides transparency, which builds trust among scientists, institutions, and the public. It also lets scientists attempt to reproduce the results of previous studies, a fundamental principle of scientific research.

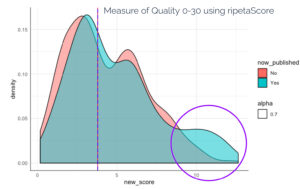

For these reasons, Ripeta takes data availability into account when it develops online tools for evaluating the quality of research articles. Articles that provide access to data, or that give legitimate reasons for not publishing data, are considered higher in quality. By giving publishers, funders, and researchers tools to check the quality of their own and other researchers’ articles, McIntosh hopes to demonstrate how valuable data sharing is and to encourage more researchers to share their data.
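A crude version of such a check can be written as a text scan that sorts an article into three groups: data shared, a reason given for withholding it, or no statement at all. The sketch below is a hypothetical keyword heuristic, not Ripeta's method; its phrase patterns are assumptions and would miss many real-world wordings.

```python
import re

# Hypothetical phrase patterns; real screening tools are far more sophisticated
_REASON_GIVEN = re.compile(
    r"data (are|is) not (publicly )?available (because|due to)", re.IGNORECASE
)
_DATA_SHARED = re.compile(
    r"data (are|is) (publicly |freely |openly )?available|deposited (in|at)",
    re.IGNORECASE,
)


def data_availability(article_text: str) -> str:
    # Both outcomes count in an article's favor; only silence does not
    if _REASON_GIVEN.search(article_text):
        return "reason given"
    if _DATA_SHARED.search(article_text):
        return "data shared"
    return "no statement"
```

For example, "All data are publicly available on Zenodo." is classed as data shared, while "The data are not available due to patient privacy." is classed as a reason given.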

The scientific community appears to have been moving rapidly in this direction since the onset of COVID-19. Ripeta found that 24% more articles provided data availability statements in 2020 than in 2019, suggesting that more authors have been sharing their data publicly since the pandemic began.

This change may not come as a surprise. The speed at which COVID-19 vaccines were developed demonstrated how much open science can benefit the medical community. When more researchers and scientists have access to clinical and research data, diseases can be studied in greater depth and treatments can be developed faster, advancing science and medicine even further.