April 1, 2013 (Vol. 33, No. 7)

In three back-to-back papers and five pages of the April 25, 1953, issue of Nature, seven authors laid out the evidence and interpretation that established the double helical structure of DNA. As ever, though, the world and science move on.

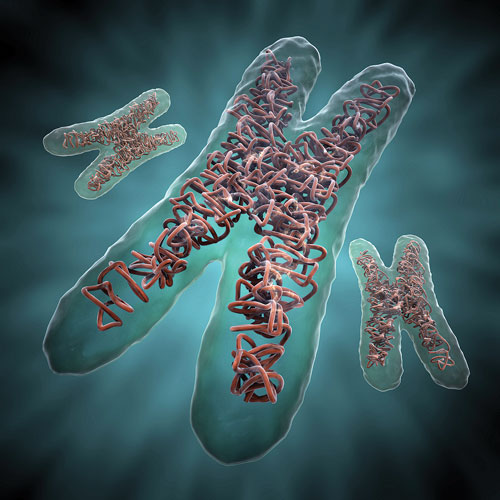

DNA, or at least our understanding of it in the context of the genome within a cell, has become much more complicated as we unravel the mechanisms that control the expression of the genes contained in that elegant molecule.

The Encyclopedia of DNA Elements (ENCODE) project aimed to integrate multiple approaches to identify the functional elements encoded in the human genome.

The September 6, 2012, issue of Nature dedicated 57 pages to six papers reporting the outcome of the genome-wide scale-up phase of ENCODE. This was published in parallel with further reports in the pages of Genome Research, Genome Biology, BMC Genomics, Science, and Cell.

The main integrative analysis paper listed more than 450 authors. Indeed, how times have changed.

The sheer scale of the data collected by the participants of the ENCODE project is slightly daunting, even to one working with it. More than 1,800 genome-wide experiments were completed, comprising the equivalent of sequencing the human genome 1,700 times over.

I think of the data as having at least two main axes. On the first it is possible to map many different types of functional regions within a cell type. For instance, chromatin immunoprecipitation and sequencing (ChIP-Seq) of different transcription factors or modified histones provides detailed maps of many different entities across the chromatin in a single cell type.

Conversely, on the second axis, a single method, say DNase-Seq or RNA-Seq, can be applied comprehensively to detect elements across many cell types.
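The two axes can be pictured as two ways of indexing the same list of experiments. The sketch below is purely illustrative: the experiment records and the helper names `by_cell_type` and `by_assay` are assumptions made for this example, not ENCODE's actual data model or file layout.

```python
from collections import defaultdict

# Hypothetical experiment records: (assay, target, cell_type).
# This short list is made up for illustration only.
EXPERIMENTS = [
    ("ChIP-Seq", "CTCF", "K562"),
    ("ChIP-Seq", "H3K27ac", "K562"),
    ("ChIP-Seq", "CTCF", "GM12878"),
    ("DNase-Seq", None, "K562"),
    ("DNase-Seq", None, "GM12878"),
    ("DNase-Seq", None, "HepG2"),
    ("RNA-Seq", None, "HepG2"),
]

def by_cell_type(experiments):
    """First axis: for each cell type, the features that were mapped in it."""
    index = defaultdict(set)
    for assay, target, cell in experiments:
        # Use the ChIP target when there is one, otherwise the assay name.
        index[cell].add(target or assay)
    return dict(index)

def by_assay(experiments):
    """Second axis: for each assay, the cell types it was applied to."""
    index = defaultdict(set)
    for assay, _target, cell in experiments:
        index[assay].add(cell)
    return dict(index)

print(by_cell_type(EXPERIMENTS)["K562"])
print(by_assay(EXPERIMENTS)["DNase-Seq"])
```

Reading the first index gives depth within one cell type; reading the second gives breadth of one method across cell types.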


ENCODE in a Nutshell

The main messages of the ENCODE extravaganza have been widely reported. To sum up: some form of recognizable biochemical activity annotates most of the genome, whether it is active transcription or modified chromatin. This suggests that at the very least the packaging of the genome and its readiness for transcription are being actively controlled. These biochemical signals can be exploited to classify the genome within a cell into different states, providing genome-wide annotations and simplifying cell-to-cell comparison.

Further, the shorter regions, where specific regulatory proteins bind, are ubiquitously distributed and some locations show evidence of many proteins binding. Simulations indicate that despite analyzing more than 120 cell types by some methods, many more such regions may yet be found. Looking in human populations, there is evidence for recent evolutionary pressure on at least some of these regions, beyond what had previously been detected within mammals.

There are many more areas where ENCODE data either opens up new avenues to explore or sheds light genome-wide on older paradigms. However, to my mind the most important aspect of the project is where the ENCODE data intersects with human variation and the ongoing deluge of human disease genome-wide association studies (GWAS).

The startling result from GWAS has been that the majority of associations lie either in introns or intergenic regions, and so do not have a straightforward explanation at the protein-coding level. Analyzing the locations of GWAS single-nucleotide variants in the context of ENCODE indicates that they are significantly enriched in ENCODE functional regions or function-rich genome partitions. ENCODE data clearly represents a rich source of annotation for your GWAS results.
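One simple way to see what "enriched" means here is to count how many variants fall inside annotated regions and compare that with the number expected if variants landed uniformly at random. The sketch below is a minimal illustration under stated assumptions: the coordinates are invented toy data, `fold_enrichment` and its helpers are hypothetical names, and the uniform background is a deliberate simplification. It is not the method used in the ENCODE papers, and a real analysis would need a more careful null model.

```python
from bisect import bisect_right

def merge_regions(regions):
    """Sort half-open (start, end) intervals and merge any that overlap or touch."""
    merged = []
    for start, end in sorted(regions):
        if merged and start <= merged[-1][1]:
            merged[-1] = (merged[-1][0], max(merged[-1][1], end))
        else:
            merged.append((start, end))
    return merged

def count_in_regions(positions, merged):
    """Count positions that fall inside any of the merged intervals."""
    starts = [s for s, _ in merged]
    hits = 0
    for pos in positions:
        # Find the last interval that starts at or before this position.
        i = bisect_right(starts, pos) - 1
        if i >= 0 and pos < merged[i][1]:
            hits += 1
    return hits

def fold_enrichment(positions, regions, chrom_length):
    """Observed hits divided by the hits expected under uniform placement."""
    merged = merge_regions(regions)
    covered = sum(end - start for start, end in merged)
    expected = len(positions) * covered / chrom_length
    return count_in_regions(positions, merged) / expected

# Toy data on one hypothetical 1,000-base chromosome (all values invented).
regions = [(100, 200), (150, 250), (600, 650)]  # 200 bases covered after merging
variants = [120, 180, 240, 300, 610, 900, 640, 50]
print(fold_enrichment(variants, regions, 1000))
```

In this toy case five of the eight variants land in regions covering a fifth of the chromosome, against 1.6 expected by chance, so the variants are roughly three times enriched.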

Open Data and the Future of Genomics

As well as integrating the data from the ENCODE project, Ewan Birney, Ph.D., associate director of the European Bioinformatics Institute, and I spent some time thinking about how we could improve delivery of the results beyond the traditional published form. Open access was the starting point, but we wanted to go further than that. Since the beginning of large-scale genome sequencing, the genomics field has been at the forefront of initiatives to make research data freely available on a timely basis.

Early on, Human Genome Project participants established the routine of releasing assembled sequences of clones within 24 hours, a practice that in my experience was often met with disbelief by potential academic collaborators.

However, the principle of rapid pre-publication data release has since become the norm for large-scale genomics research. The ENCODE project was established as a community resource project, and provided early access as soon as the data was shown to be reproducible, according to the consortium Data Release and Usage policy.

The effectiveness of that early data release was emphasized to us when we searched for publications that had used ENCODE data and were able to identify more papers already published from outside the consortium than would comprise the complete ENCODE publication package.

Mike Pazin of the National Human Genome Research Institute (NHGRI) continues to update this list (http://encodeproject.org/ENCODE/pubsOther.html), and a year later there were 208 extra-consortium publications. So the data from the project is certainly having an impact.

Interconnected, Open Data

With the publication of the ENCODE analysis results, we wanted to go beyond simply releasing the data and provide additional resources that would allow readers to explore the data, reproduce the analysis, and make connections between the set of papers published together.

The goal of open data use is part of the larger open-access literature discussion, where there remain substantial technical and legal considerations. On the technical side, the burden on authors is already heavy in terms of manuscript and data preparation alone. Online submission systems are already groaning under the strain, and doing more with the data will require standardized formats and interfaces to be created.

Just shoehorning the 450-odd authors of the ENCODE consortium integrated paper into the Nature online submissions system was a taxing experience. Although a trivial example, my point is that most authors have neither the time nor the inclination to get to grips with even minor obstacles to open data use, so the path needs to be made as smooth as possible.

One way to advance toward reusable data now is simply to see what you can provide, and this is what we tried to do. Ewan and I were able to interest Nature in enhancing the ENCODE publications with interactive graphics for some of the main figures, and with the “thread” concept that would bring together the related science woven throughout the many papers, providing it in one place accessible via the Internet or the associated app.

In a sense, the threads constitute our own meta-review of the papers. These publication embellishments were well executed by the Nature team and were, we hope, fun and informative.

A much more important development was the provision of working versions of the computer code that was used in the main ENCODE integrative paper, which is offered up in the form of a virtual machine (VM). We had initially prepared annotated versions of our code along with the manuscript, and these acted essentially as the experimental methods.

However, in the review process we realized that we could perhaps go a step further and provide working versions of the analyses, so that anyone with a reasonable computer could directly reproduce the results, and work with the code to develop their own analysis.

As anyone who works in bioinformatics will know, moving software to a new infrastructure can be hairy, but migration to a Linux-based VM worked well because most of the analysts were using standard, open-source software and libraries.

We were able to test rapidly that everything would run and that all the required data was on board. This is not a perfect solution by any means. The VM is large (18 GB) and expands to require quite a large footprint, but it does provide a transportable functioning distillation of our analysis methods, and I was very excited to see people downloading and using it.

In the long run, I envisage a publication environment wherein research papers are openly accessible and the data and analyses within each paper can be directly accessed for reuse across the Internet. I can imagine a future where one doesn’t just publish and forget, but each publication element contributes to a highly interconnected, worldwide research resource. Many people are already working toward this goal in different areas but a push must come from the research community to really make it happen.

ENCODE-ing the Future?

Where does ENCODE go next? The NHGRI recently funded the third phase of the project. Although I did not apply to be involved further due to other interests, I know there is a lot for them to do. Only around 10% of the estimated 1,400 human transcription factors were assayed by ChIP-Seq the first time around.

More comprehensive lists generated either using additional antibodies or by epitope tagging methods will enhance the encyclopedia. Extending the broad methods like DNase-Seq and RNA-Seq into the hundreds of cell types that have so far not been touched, especially concentrating on normal tissue and non-transformed cells, will do the same and additionally answer the criticism that is often raised around some of the cell types used initially.

Development of ENCODE-style analysis in other species, including the mouse (partly within ENCODE) and other model or informative species, will uncover further comparative insights. Applying the same methods to differentiating or stimulated cellular models will give rich data on chromatin state changes, concomitant transcription factor binding events, and cellular outputs. These resources should be prime inputs for future computational models of human chromatin and transcription.

One legacy of the first phases of the ENCODE project has been an open data model that really worked, and I look forward to seeing that legacy grow as the project continues to deliver new and exciting information that will change the way we do biology.

Ian Dunham, Ph.D., is a staff scientist at the European Bioinformatics Institute.